Artificial Intelligence

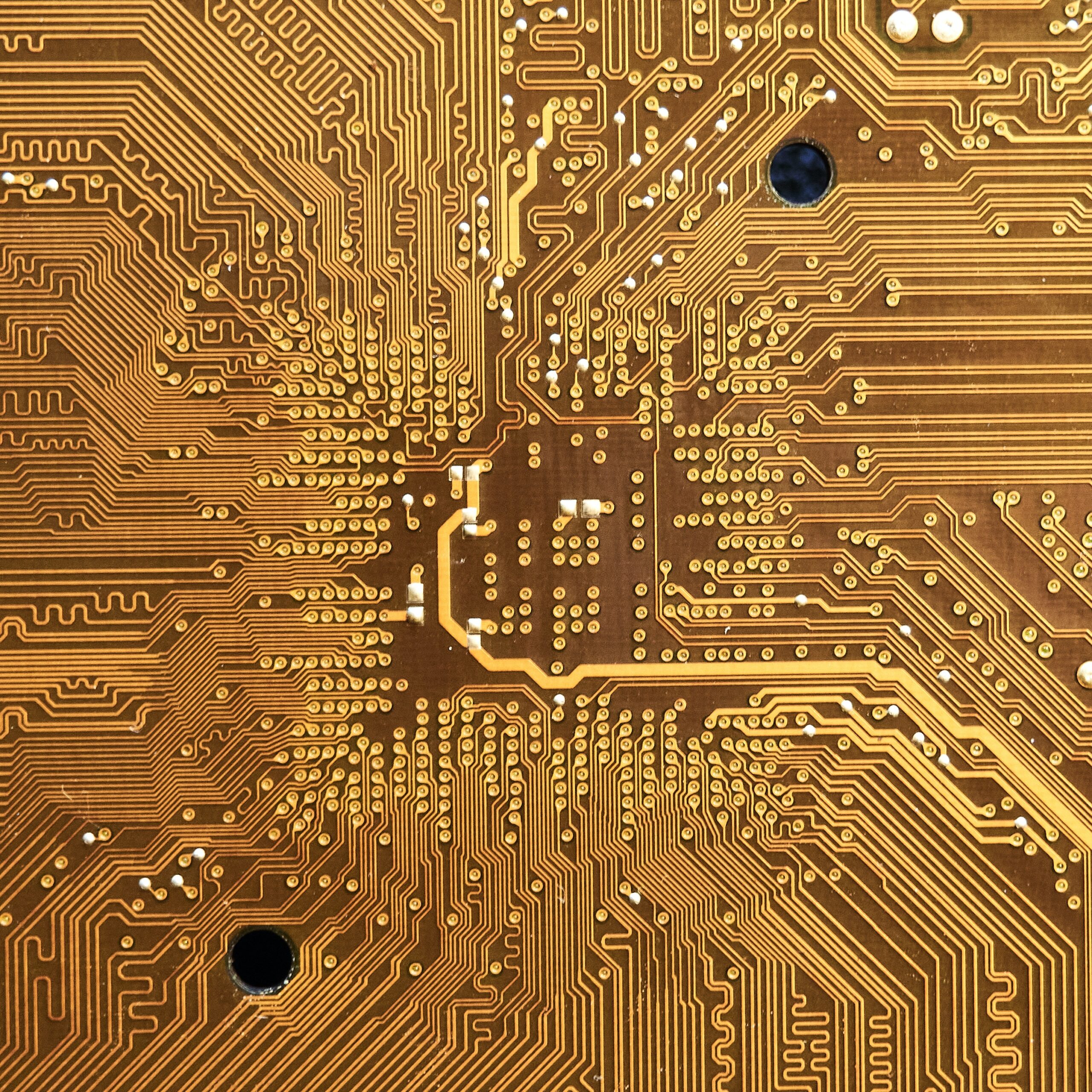

On Data Engineering Projects, This Is Your Mind

Data engineering is a growing field that applies software engineering to the collection, storage, and processing of large datasets so that knowledge can be extracted from them. Data engineering projects are becoming increasingly important for organizations looking to leverage machine learning, artificial intelligence, and analytics. By understanding the fundamentals of data engineering, businesses can unlock the potential of their data and use it to improve their products and services. In this article, we will discuss the basics of data engineering projects and their importance in today’s digital landscape.

Data Engineering Projects

Data engineering projects are an emerging trend in the tech world. Business leaders, scientists, and engineers are all harnessing data to create innovative products and services. Data engineering is the process of collecting and processing large volumes of data from various sources in order to extract meaningful insights that can be used for decision-making. Knowing what goes into a successful data engineering project will help you understand how to best tackle your unique challenges.

This article dives deep into the world of data engineering projects, exploring the key components necessary for success. We discuss common challenges faced by those new to this area, as well as strategies for overcoming those barriers. We also look at ways to use different types of technology such as machine learning algorithms and cloud computing solutions to maximize results from your project. Finally, we provide resources you can utilize when launching or maintaining a successful data engineering endeavor.

Steps to Successful Data Engineering Projects

Data engineering projects are becoming increasingly important in the world of technology and innovation. From businesses to government agencies, organizations across the globe are investing in data engineering projects to gain insights into their products, processes, and customers. To ensure successful outcomes for these projects, it is essential that project teams build a strong foundation before beginning development.

A key step in any data engineering project is understanding the problem at hand. Assemble a team that has a deep knowledge of the domain and its associated problems and challenges. Identify exactly what you want to accomplish with your project and what data sets you need to make it happen. This will help you understand how much time, effort, and resources are required for success.

Once you have identified the scope of your project, create detailed plans for each stage of development. With those plans in place, you can begin working on the infrastructure and tools needed to support end-to-end data engineering.

Establish a data governance process. If your organization does not have a formal data governance process in place, it is time to put one in place.

Developing an Effective Data Model

Data engineering projects are becoming increasingly important as data-driven solutions become a core component of business operations. Developing an effective data model is essential for successful outcomes in these projects. An effective data model should provide the necessary analysis and insights to support decision-making and facilitate efficient integration with other systems.

The purpose of the data model is to structure data so that it can be effectively queried, summarized, and processed. It should provide a unified view of the organization’s current information architecture and enable quick access to relevant information from multiple sources. A well-designed model should also align with industry standards, ensure scalability, and support complex analytics requirements.

Organizations must ensure that their models meet these criteria if they want their projects to be successful over time.

Data Quality

Organizations can use data and data modeling to improve their internal processes and customer service. However, if the underlying data are not accurate or complete, these efforts will be wasted. For example, using incomplete customer information to make business decisions is a waste of time and money.
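The point about incomplete customer information can be made concrete with a small completeness check. The sketch below is only an illustration: the field names in `REQUIRED_FIELDS` and the sample records are hypothetical, and a real project would define its own rules for what counts as "complete".

```python
from typing import Iterable

# Hypothetical schema: the fields a customer record must carry to be usable.
REQUIRED_FIELDS = ("customer_id", "email", "country")


def is_filled(value) -> bool:
    """A value counts as filled if it is not None and not blank text."""
    return value is not None and str(value).strip() != ""


def completeness(records: Iterable[dict], required=REQUIRED_FIELDS) -> float:
    """Return the fraction of records in which every required field is filled."""
    records = list(records)
    if not records:
        return 0.0
    complete = sum(
        all(is_filled(record.get(field)) for field in required)
        for record in records
    )
    return complete / len(records)


# Made-up sample data for illustration.
customers = [
    {"customer_id": 1, "email": "a@example.com", "country": "DE"},
    {"customer_id": 2, "email": "", "country": "FR"},   # blank email
    {"customer_id": 3, "email": "c@example.com"},       # no country at all
    {"customer_id": 4, "email": "d@example.com", "country": "US"},
]

score = completeness(customers)
print(f"complete records: {score:.0%}")
```

A check like this is cheap to run before any analysis, and a low score is a signal to fix the source data rather than to build on top of it.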

Using Automation for Tasks

Using automation for tasks is an effective way to streamline the work process and save time. Automation can be applied to a variety of data engineering projects, from obtaining data sources to cleaning up messy datasets. It can even help with organizing large volumes of data and creating a unified structure that makes it easier to analyze.

In today’s data-driven world, automation is becoming increasingly important when it comes to streamlining processes and making sure that all the various components work together as one cohesive unit. Automation helps ensure accuracy in core operations such as collecting, cleaning, validating, transforming, and storing data. By instituting automated processes, businesses can save time and ensure more efficient outcomes — all while freeing up resources for other important tasks.

Tools for Cleaning and Preparing Data

Data engineering projects are often complex and require a lot of work. Cleaning and preparing data is a big part of the process, so it’s important to have the right tools for the job. There are many options available that are designed to help streamline data preparation tasks, making it easier to get the job done efficiently and accurately.

One example of such a tool is an ETL (extract, transform, and load) software program. This type of program can be used to extract data from multiple sources, clean it up by removing incorrect or inconsistent records, convert formats as necessary, join different sets together as needed and then load the results into a destination database or file system. It can greatly reduce manual labor in these types of tasks while still providing quality results. Another useful tool is an analytics platform that can provide insights into underlying trends in your data set.
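To show the extract, transform, and load steps end to end, here is a minimal sketch using only Python's standard library. The CSV contents, column names, and table layout are invented for the example, and an in-memory SQLite database stands in for the destination; dedicated ETL tools do far more (scheduling, retries, monitoring), so treat this as a toy version of the idea.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice these would be files, APIs, or databases.
ORDERS_CSV = """order_id,customer_id,amount
1,10,19.99
2,11,not-a-number
3,10,5.00
4,99,12.50
"""

CUSTOMERS_CSV = """customer_id,name
10,Ada
11,Grace
"""


def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(orders, customers):
    """Transform: drop bad records, convert types, and join orders to customers."""
    names = {c["customer_id"]: c["name"] for c in customers}
    rows = []
    for order in orders:
        try:
            amount = float(order["amount"])  # convert text to a number
        except ValueError:
            continue  # remove records with an invalid amount
        name = names.get(order["customer_id"])
        if name is None:
            continue  # remove orders that reference an unknown customer
        rows.append((int(order["order_id"]), name, amount))
    return rows


def load(rows, conn):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
rows = transform(extract(ORDERS_CSV), extract(CUSTOMERS_CSV))
load(rows, conn)

count, total = conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone()
print(f"loaded {count} orders, total amount {total:.2f}")
```

Keeping the three stages as separate functions makes each one easy to test on its own, which is most of what an ETL framework formalizes.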

Leveraging ETL Frameworks

Data engineering projects can be daunting and complex, but leveraging ETL frameworks can help streamline the process. An Extract-Transform-Load (ETL) framework is a set of tools, practices, and processes designed to simplify data integration tasks. It helps businesses acquire, cleanse, and organize data from multiple sources so that it can be used effectively for reporting, analytics, and other applications.

With an ETL framework, businesses benefit from improved scalability and performance while reducing overall costs associated with manual coding or scripting. The framework also provides visibility into the underlying systems enabling organizations to make better-informed decisions about their data pipelines. In addition, many of these frameworks have automated testing capabilities that allow users to quickly detect errors or inconsistencies in their code before deploying it in production.

Conclusion: Working Mindfully on Data Engineering Projects

Data engineering projects can be challenging for many reasons, but a mindful approach to the task can help alleviate some of the stress. Working mindfully means taking the time to understand the project from all angles, then applying problem-solving techniques and attention to detail where they matter. This approach tends to yield better results in less time than rushing through a job without thinking it through properly. It also helps ensure that you don’t become overwhelmed by huge tasks or miss important steps along the way. Mindful data engineering is an effective way to get your project done quickly and accurately while avoiding burnout and frustration.

Beyond Passwords: Your Digital Self in the Age of Blockchain

Tired of Password Purgatory? Enter the Blockchain Oasis.

Remember that frantic morning scramble, brain aching for the elusive password to your bank account?

Or the sinking feeling when “incorrect password” flashes back, mocking your best guess?

We’ve all been there, prisoners of our own digital fortresses, locked out by the very keys meant to protect us. But what if we told you there’s a better way, a passwordless paradise called blockchain technology?

Think of it as your digital Swiss bank account, secure and impregnable.

Unlike the flimsy walls of traditional passwords, easily breached by hackers and cracked by brute force, blockchain builds fortresses with ironclad cryptography.

Your data, from medical records to online purchases, isn’t hoarded by corporations or vulnerable to centralized attacks.

Instead, it lives on a distributed ledger, a communal vault replicated and cryptographically secured across thousands of computers, each one a guardian vigilantly watching over your digital self.

This isn’t just about convenience (though ditching those sticky notes is a major perk!). This is about ownership and control.

Blockchain empowers you to be the gatekeeper of your digital life. You decide what information you share, with whom, and for how long.

No more Big Brother data slurping or shady companies monetizing your privacy. You become the sovereign ruler of your own digital kingdom, wielding the keys to unlock or deny entry.

Imagine accessing your healthcare records without divulging them to every hospital you visit.

Picture transferring money across borders, instantly and securely, without banks taking a hefty cut.

Envision a world where your online identity isn’t a mosaic scattered across corporate servers, but a unified passport you carry with pride, granting access to services based on your own terms.

This is the promise of blockchain: a future where security isn’t a fragile wall of passwords, but a vibrant ecosystem of trust and empowerment.

Are you ready to leave Password Purgatory behind and step into the Blockchain Oasis? Buckle up, savvy internet user, because the revolution has just begun.

The Downside of Traditional Passwords

Why Are Passwords Failing Us?

In our digital age, passwords are the guardians of our online identities, yet they are failing us in more ways than one. Despite being the first line of defense in digital security, traditional passwords are increasingly becoming the weakest link. Let’s explore why.

The Illusion of Strength in Complexity

Think of the last time you created a password. Chances are, you were prompted to include a mix of letters, numbers, and symbols – the more complex, the better, right?

However, this complexity often leads to passwords that are hard to remember and, ironically, not as secure as we think. Cybersecurity experts have long debunked the myth that complexity equates to security.

In reality, it’s the length and unpredictability of passwords that matter most. But even then, no password is impervious to the sophisticated tactics employed by hackers today.
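A quick calculation shows why length tends to beat complexity. The sketch below uses the standard estimate of entropy for a randomly generated password, length × log2(alphabet size). It assumes every character is chosen uniformly at random, which passwords picked by people rarely are, so real-world figures will be lower.

```python
import math


def entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy in bits of a password whose characters are drawn
    uniformly at random from an alphabet of the given size."""
    return length * math.log2(alphabet_size)


# 8 characters drawn from the 94 printable, non-space ASCII characters.
short_complex = entropy_bits(8, 94)

# 20 characters drawn from lowercase letters only.
long_simple = entropy_bits(20, 26)

print(f"8 chars, 94 symbols:  {short_complex:.1f} bits")
print(f"20 chars, 26 symbols: {long_simple:.1f} bits")
```

Under these assumptions the short "complex" password comes out at roughly 52 bits, while the long lowercase one reaches roughly 94 bits, and every additional bit doubles the work a brute-force attacker has to do.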

The Human Factor: Password Fatigue and Security Risks

As humans, we have our limits in remembering complex strings of characters. This leads to password fatigue – the tendency to reuse passwords across multiple accounts for convenience.

It’s like using the same key for your house, car, and office; if one gets lost or stolen, everything is compromised. This common practice significantly heightens the risk of mass data breaches.

Once a hacker cracks one password, they potentially gain access to an entire suite of an individual’s personal and professional digital life.

The Hacker’s Playground: Vulnerabilities in Password-Based Security

Hackers have a plethora of tools at their disposal to breach password-protected accounts. Techniques like brute force attacks, where automated software tries countless combinations until it finds the right one, are surprisingly effective against weak passwords.

Phishing attacks, where users are tricked into revealing their passwords, are increasingly sophisticated and difficult to spot.

Even when we think our passwords are safely stored, large-scale data breaches at major companies reveal that this is not always the case.

The Cost of Password Management and Recovery

The administrative burden of managing and recovering passwords is another often-overlooked downside.

For businesses, the cost of resetting passwords and dealing with security breaches can be astronomical.

For individuals, the time and effort spent managing passwords, coupled with the anxiety of keeping them safe, are a significant mental burden.

Biometric Authentication: A Step Forward but Not a Panacea

The advent of biometric authentication – using fingerprints, facial recognition, or retinal scans – seemed like a promising solution.

However, while biometrics offer convenience, they are not without their flaws. Biometric data, once compromised, cannot be changed like a password.

There are also privacy concerns and the potential for misuse of biometric data by corporations or governments.

Looking Beyond Passwords: The Need for a Paradigm Shift

The limitations of traditional passwords highlight the need for a paradigm shift in digital security.

We need a solution that is both secure and user-friendly, something that doesn’t require us to remember complex strings of characters or put our personal biometrics at risk.

This is where blockchain technology comes into play, offering a new way to authenticate identities and secure data without the pitfalls of traditional passwords.

Conclusion

In conclusion, while passwords have been the cornerstone of digital security for decades, their numerous shortcomings are increasingly apparent in our connected world.

As cyber threats evolve, so must our approach to securing our digital lives.

The future of digital security lies in innovative technologies like blockchain, which promise to offer a more secure, efficient, and user-centric approach to protecting our digital identities.

Blockchain: The Game-Changer in Digital Security

How Does Blockchain Enhance Security?

In the digital world, where data breaches and cyber threats loom large, blockchain emerges as a beacon of hope, promising a more secure and trustworthy online environment.

But what exactly makes blockchain a game-changer in digital security? Let’s unpack this.

The Immutable Ledger: A Foundation of Trust

At its core, blockchain is a distributed ledger technology. Imagine a ledger that is not maintained by a single entity but is spread across a network of computers, each holding a copy of the ledger.

This decentralization is key to blockchain’s power. Once a record is added to the blockchain, altering it is next to impossible.

This immutability provides a foundation of trust and security unprecedented in digital transactions.

Decentralization: The Antidote to Centralized Risk

Centralization in traditional digital systems creates a single point of failure, a treasure trove for cybercriminals. Blockchain disrupts this by distributing data across a vast network, thereby diluting the risk.

Even if a part of the network is compromised, the rest remains unscathed, preserving the integrity of the entire system.

Cryptography: The Art of Secret Keeping

Blockchain employs advanced cryptography to secure the data.

Each block in the chain is secured with a cryptographic hash: the output of a hash function, an algorithm that turns data into a fixed-length string of characters that is, for practical purposes, unique to that data. Any change in the data alters the hash dramatically.

This means that tampering with a block would require altering all subsequent blocks, an almost Herculean task given the computational power required.
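The claim that a small change in the data alters the hash dramatically is easy to try for yourself. This sketch uses SHA-256 from Python's standard library; the two transaction strings are made up, and SHA-256 is chosen here only as a familiar example of a cryptographic hash function.

```python
import hashlib


def sha256_hex(data: str) -> str:
    """Return the SHA-256 digest of a string as 64 hexadecimal characters."""
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


# Two made-up records that differ by a single character.
original = sha256_hex("Alice pays Bob 10")
tampered = sha256_hex("Alice pays Bob 11")

# Count how many of the 64 hex positions differ between the two digests.
differing = sum(a != b for a, b in zip(original, tampered))

print(original)
print(tampered)
print(f"{differing} of {len(original)} hex characters differ")
```

Running it shows two digests with no visible relationship to each other, even though the inputs differ by one digit.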

Transparency and Anonymity: A Balancing Act

Blockchain strikes a unique balance between transparency and anonymity.

On public blockchains, all transactions are visible to everyone in the network, while the parties involved appear only as pseudonymous addresses rather than under their real names.

This transparency ensures accountability and trust, while anonymity safeguards user privacy.

Smart Contracts: The Protocols of Trust

Smart contracts, self-executing contracts with the terms of the agreement directly written into code, are a revolutionary aspect of blockchain.

They automate and enforce contractual obligations, reducing the need for intermediaries and the risk of fraud or manipulation.

Beyond Cryptocurrency: Diverse Applications of Blockchain in Security

Blockchain’s potential extends far beyond the realms of cryptocurrency.

In digital identity verification, it offers a secure way to manage and authenticate identities without centralized databases.

In supply chain management, it ensures the authenticity and traceability of products. In voting systems, it can provide secure and transparent electoral processes.

Challenges in Blockchain Adoption

Despite its potential, blockchain’s adoption faces hurdles.

Scalability, energy consumption, and the integration with existing systems are significant challenges. Furthermore, regulatory uncertainties and a lack of widespread understanding of the technology hinder its broader acceptance.

Preparing for a Blockchain-Enabled Future

As we stand on the brink of a blockchain revolution in digital security, it’s essential to educate ourselves about this technology.

Businesses and individuals alike must stay informed about the latest developments in blockchain and explore how it can be integrated into their digital practices.

Conclusion

Blockchain technology offers a new paradigm in digital security, promising a more secure, transparent, and efficient way to safeguard digital assets and identities.

As we move forward, embracing this technology and overcoming its challenges will be key to building a safer digital world.

The Mechanics of Blockchain Security

Understanding the Nuts and Bolts

Blockchain security is often hailed as a groundbreaking innovation, but how does it really work? What makes it so secure and reliable?

Let’s dive into the mechanics of blockchain security and understand what sets it apart.

The Anatomy of a Blockchain

Picture a blockchain as a series of digital ‘blocks’ linked together in a chain.

Each block contains a set of transactions or data, together with a cryptographic hash that protects its integrity.

Every time a new block is added, it is verified by multiple nodes (computers) in the network, making fraud or alteration extremely difficult.

The Power of Decentralization

Decentralization is the heartbeat of blockchain security.

Unlike traditional systems where data is stored on central servers, blockchain distributes data across a network of nodes.

This means there’s no single point of failure, making it incredibly resistant to cyber-attacks and data breaches.

Cryptography: The Backbone of Blockchain Security

Blockchain uses advanced cryptographic techniques. Each block contains a unique cryptographic hash of the previous block, creating a secure link.

Altering any information would change the hash, alerting the network to the tampering. This cryptographic chaining ensures the integrity and chronological order of the blockchain.
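The chaining described above can be sketched in a few lines of Python. This is a toy model, not how any particular blockchain is implemented: the block fields (`index`, `data`, `prev_hash`) are assumptions made for the example, and the "network" is reduced to a single head hash that honest participants are assumed to remember.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(block: dict) -> str:
    """Hash a block's full contents, including the previous block's hash."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def build_chain(entries):
    """Link entries into a chain; return the chain and the hash of its last block."""
    chain = []
    prev = GENESIS_PREV
    for index, data in enumerate(entries):
        block = {"index": index, "data": data, "prev_hash": prev}
        chain.append(block)
        prev = block_hash(block)
    return chain, prev


def is_valid(chain, expected_head: str) -> bool:
    """Recompute every link and compare the final hash with the known head."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev_hash"] != prev:
            return False  # this block does not point at its real predecessor
        prev = block_hash(block)
    return prev == expected_head


chain, head = build_chain(["Alice pays Bob 10", "Bob pays Carol 4", "Carol pays Dan 1"])
print("untouched chain valid:", is_valid(chain, head))

chain[1]["data"] = "Bob pays Carol 400"  # tamper with a block in the middle
print("tampered chain valid: ", is_valid(chain, head))
```

Because each block's hash covers the previous hash, changing one record breaks the link stored in the next block, which is what makes the tampering visible.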

Consensus Protocols: Ensuring Network Agreement

Blockchain operates on consensus protocols, rules that dictate how transactions are verified and added to the chain.

Popular protocols like Proof of Work (PoW) and Proof of Stake (PoS) ensure that all nodes in the network agree on the state of the ledger, preventing fraudulent transactions.
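To give a feel for Proof of Work, here is a deliberately simplified sketch of the puzzle at its heart: find a number (a nonce) that makes the block's hash start with a given number of zeros. The difficulty rule, the way data and nonce are combined, and the function names are all simplifications chosen for this example; real networks use more elaborate block formats and difficulty targets.

```python
import hashlib


def pow_hash(block_data: str, nonce: int) -> str:
    """Hash the block data together with a candidate nonce."""
    return hashlib.sha256(f"{block_data}|{nonce}".encode("utf-8")).hexdigest()


def mine(block_data: str, difficulty: int = 4):
    """Try nonces one by one until the hash starts with `difficulty` zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = pow_hash(block_data, nonce)
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int = 4) -> bool:
    """Checking a solution takes a single hash, however long mining took."""
    return pow_hash(block_data, nonce).startswith("0" * difficulty)


nonce, digest = mine("Alice pays Bob 10")
print(f"nonce {nonce} gives hash {digest}")
print("solution verifies:", verify("Alice pays Bob 10", nonce))
```

The asymmetry is the point: finding the nonce takes many attempts, while any node can confirm the answer almost instantly, which is what makes rewriting history expensive.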

Smart Contracts: Automating Trust

Smart contracts on the blockchain automatically execute transactions when predetermined conditions are met.

These contracts run on code and eliminate the need for intermediaries, reducing the risk of manipulation and increasing efficiency.
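The idea of code that pays out automatically once conditions are met can be illustrated with a plain Python class. This is only a simulation: real smart contracts are deployed to a blockchain and written in contract languages, and the `EscrowContract` class, its method names, and the escrow scenario are all hypothetical.

```python
class EscrowContract:
    """Toy escrow: the seller is paid only after the buyer has deposited
    the agreed amount and confirmed delivery."""

    def __init__(self, buyer: str, seller: str, amount: int):
        self.buyer = buyer
        self.seller = seller
        self.amount = amount
        self.funded = False
        self.delivered = False
        self.paid_out = False

    def deposit(self, sender: str, amount: int) -> None:
        """Accept funds, but only the agreed amount from the buyer."""
        if sender != self.buyer or amount != self.amount:
            raise ValueError("only the buyer may deposit the agreed amount")
        self.funded = True

    def confirm_delivery(self, sender: str):
        """Record delivery and settle if all conditions now hold."""
        if sender != self.buyer:
            raise PermissionError("only the buyer may confirm delivery")
        self.delivered = True
        return self._settle()

    def _settle(self):
        """Release payment exactly once, when funded and delivered."""
        if self.funded and self.delivered and not self.paid_out:
            self.paid_out = True
            return (self.seller, self.amount)
        return None


contract = EscrowContract(buyer="alice", seller="bob", amount=100)
contract.deposit("alice", 100)
payout = contract.confirm_delivery("alice")
print("payout:", payout)
```

Nobody decides whether to pay: once the conditions written into the code hold, settlement follows, and calling `confirm_delivery` a second time pays nothing further.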

Challenges and Limitations

Despite its strengths, blockchain isn’t without challenges.

Issues like scalability, energy consumption (especially with PoW), and integrating blockchain into existing systems remain significant hurdles.

There’s also the concern of ‘51% attacks’ in some blockchains, where if more than half the network’s computing power is controlled by one entity, they could potentially manipulate the network.

Conclusion

Blockchain security offers a robust and innovative approach to protecting digital information.

Its combination of decentralization, cryptography, and consensus protocols provides a level of security far beyond traditional methods.

As the technology matures and overcomes its current limitations, blockchain stands to revolutionize how we secure our digital world.

Blockchain vs. Passwords: A Comparative Analysis

Why Blockchain Wins

In the digital security arena, blockchain and traditional passwords represent two very different philosophies.

While passwords have been the cornerstone of digital security for decades, blockchain introduces a paradigm shift.

Let’s compare these two to understand why blockchain is emerging as the superior option.

Traditional Passwords: The Aging Security Guard

Think of traditional passwords as an aging security guard. They’ve been around for a long time, are familiar, but they’re not as effective as they used to be.

Passwords are vulnerable to a range of attacks – from brute force to phishing – and they rely heavily on human memory and behavior, which can be fallible.

Blockchain: The Digital Fort Knox

On the other hand, blockchain can be likened to a digital Fort Knox. It doesn’t rely on single-key entry like passwords.

Instead, it offers a distributed, immutable ledger with complex cryptographic techniques.

This makes blockchain inherently more secure, as altering any part of the chain would require an astronomical amount of computing power.

User Experience: Complexity vs. Simplicity

One of the biggest drawbacks of passwords is their complexity and the user fatigue they cause. In contrast, blockchain can streamline the user experience.

With blockchain, users can potentially have a single digital identity for multiple platforms, eliminating the need to remember numerous passwords.

Decentralization: Eliminating Single Points of Failure

Passwords often involve centralized databases, creating single points of failure. Blockchain distributes data across a vast network, significantly reducing this risk.

Even if a part of the network is compromised, the rest remains secure, safeguarding the overall integrity.

Recovery and Revocation: A Clear Winner

Losing a password can lead to a cumbersome recovery process. Blockchain offers a more resilient approach.

For instance, with decentralized identity solutions, users can recover their identity through distributed mechanisms, without relying on a central authority.

Scalability and Adoption Challenges

Despite its advantages, blockchain faces its own set of challenges, particularly in scalability and widespread adoption.

Integrating blockchain into existing systems and ensuring it can handle large volumes of transactions are areas that need addressing.

Conclusion

Comparing blockchain with traditional passwords illustrates why blockchain is poised to be the future of digital security.

Its decentralized nature, enhanced security features, and user-friendly potential make it a formidable tool against cyber threats.

As blockchain technology overcomes its current challenges, it could render traditional passwords obsolete, ushering in a new era of digital security.

Real-World Applications of Blockchain in Security

Blockchain in Action

The realm of blockchain extends far beyond the confines of cryptocurrency.

Its unique attributes are being harnessed in various sectors, revolutionizing the way we think about and implement digital security.

Let’s explore some of the most impactful real-world applications of blockchain technology.

Revolutionizing Digital Identity Verification

One of the most significant applications of blockchain is in digital identity verification.

Traditional methods of identity verification are fraught with risks – from data breaches to identity theft.

Blockchain introduces a decentralized approach, where users can control their digital identities without relying on a central authority. This method not only enhances security but also offers greater privacy and control to individuals.

Transforming Supply Chain Management

In supply chain management, blockchain is a game-changer.

It provides a transparent, tamper-evident record of transactions and movements of goods.

This transparency ensures the authenticity of products, combats counterfeit goods, and enhances trust among consumers and businesses alike. From farm to table or manufacturer to retailer, every step is verifiable and secure.

Secure Voting Systems

Blockchain is making strides in securing electoral processes.

By leveraging blockchain, voting systems can become more secure, transparent, and resistant to tampering. This application could revolutionize democracy, making elections more accessible and trustworthy.

Healthcare Data Management

In healthcare, blockchain can securely manage patient records, ensuring privacy and data integrity.

It provides a secure platform for sharing medical records between authorized individuals and institutions, improving the efficiency and accuracy of diagnoses and treatments.

Challenges and Considerations

While these applications are promising, challenges such as scalability, regulatory compliance, and integration with existing systems remain.

There is also a need for a broader understanding and acceptance of blockchain technology among the general public and within specific industries.

Conclusion

The real-world applications of blockchain in security demonstrate its potential to revolutionize how we manage and protect digital information.

As the technology evolves and these challenges are addressed, blockchain is set to play a pivotal role in shaping a more secure and efficient digital world.

Overcoming the Challenges of Implementing Blockchain

Tackling the Obstacles

Blockchain technology, despite its potential, is not without its challenges.

Implementing blockchain in existing systems and processes requires navigating a complex landscape of technical, regulatory, and practical hurdles.

Let’s delve into these challenges and explore how they can be overcome.

Scalability: The Growing Pains of Blockchain

One of the primary challenges facing blockchain is scalability.

As the number of transactions on a blockchain increases, so does the need for greater computational power and storage.

This can lead to slower transaction times and higher costs, making it less practical for large-scale applications.

Solutions like sharding, where the blockchain is divided into smaller, more manageable pieces, and layer 2 solutions that process transactions off the main chain, are being explored to address this issue.
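As a rough picture of what dividing a blockchain into pieces means, the sketch below assigns accounts to shards by hashing their names, so each shard only has to process its own slice of the traffic. The account names, the four-shard setup, and the `shard_for` function are assumptions for illustration; production sharding designs also have to handle transactions that cross shards, which this example ignores.

```python
import hashlib
from collections import defaultdict


def shard_for(account: str, num_shards: int) -> int:
    """Map an account to a shard deterministically using its hash."""
    digest = hashlib.sha256(account.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Made-up transactions: (sender, amount).
transactions = [
    ("alice", 10), ("bob", 4), ("carol", 7),
    ("dave", 1), ("alice", 3), ("erin", 9),
]

NUM_SHARDS = 4
by_shard = defaultdict(list)
for sender, amount in transactions:
    by_shard[shard_for(sender, NUM_SHARDS)].append((sender, amount))

for shard in sorted(by_shard):
    print(f"shard {shard}: {by_shard[shard]}")
```

Because the assignment depends only on the account name, every node can work out which shard is responsible for a transaction without asking anyone else.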

Energy Consumption: A Sustainable Dilemma

Blockchains, especially those using Proof of Work (PoW) consensus mechanisms, can be energy-intensive.

This raises concerns about the environmental impact of blockchain technologies.

Transitioning to more energy-efficient consensus mechanisms like Proof of Stake (PoS) or exploring renewable energy sources for mining operations are ways to mitigate this issue.

Regulatory Hurdles: Navigating the Legal Landscape

The decentralized and often borderless nature of blockchain poses significant regulatory challenges. Different countries have varying regulations regarding digital transactions, data privacy, and cryptocurrency.

Ensuring compliance while fostering innovation requires a delicate balance and ongoing dialogue between technology providers, users, and regulatory bodies.

Integration with Existing Systems

Integrating blockchain technology with existing digital infrastructures can be complex. Compatibility issues, the need for technical expertise, and the disruption of established processes can pose significant barriers.

Developing user-friendly blockchain solutions and providing education and training can facilitate smoother integration.

Security Concerns: Addressing Vulnerabilities

While blockchain is inherently secure, it is not immune to risks.

Potential security issues, such as 51% attacks, smart contract vulnerabilities, and key management challenges, need to be addressed.

Continuous research and development, alongside robust security protocols, are essential to bolster blockchain security.

Conclusion

Overcoming the challenges of implementing blockchain is crucial for realizing its full potential.

This involves addressing scalability and energy consumption issues, navigating regulatory landscapes, integrating with existing systems, and bolstering security measures.

As we tackle these obstacles, blockchain’s promise in transforming digital security becomes increasingly attainable.

The Future of Blockchain in Personal Security

What Lies Ahead

The horizon of personal security is being redrawn by blockchain technology.

As we edge further into the digital era, blockchain is poised to play a critical role in shaping our digital identities and safeguarding our online activities.

Let’s explore the promising future of blockchain in personal security.

Blockchain as a Guardian of Digital Identities

Imagine a world where your digital identity is as unique and unforgeable as your DNA.

Blockchain makes this possible. With its ability to create tamper-proof digital identities, blockchain is setting the stage for a future where identity theft and fraud become relics of the past.

These blockchain-based identities could replace traditional login credentials, offering a more secure and convenient way to access online services.

Enhanced Privacy and Control Over Personal Data

In an age where data breaches are commonplace, blockchain offers a beacon of hope. It empowers individuals with greater control over their personal data.

Instead of entrusting personal information to multiple organizations, blockchain allows individuals to store their data securely and share it selectively, using cryptographic keys.
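One way to picture sharing data selectively is with salted hash commitments: you publish only a fingerprint of each attribute, then reveal individual attributes (plus their salts) to whoever needs them. Note that this sketch swaps in hash commitments rather than the key-based encryption or signatures the paragraph mentions, and it leaves out the step where a trusted issuer signs the commitments. The attribute names and function names are hypothetical.

```python
import hashlib
import secrets


def commit(name: str, value: str, salt: str) -> str:
    """Fingerprint one attribute; the random salt prevents guessing the value."""
    return hashlib.sha256(f"{salt}|{name}|{value}".encode("utf-8")).hexdigest()


def issue(attributes: dict):
    """Create a private salt and a publishable commitment for each attribute."""
    salts = {name: secrets.token_hex(16) for name in attributes}
    commitments = {
        name: commit(name, value, salts[name])
        for name, value in attributes.items()
    }
    return salts, commitments


def disclose(attributes: dict, salts: dict, name: str) -> dict:
    """Reveal a single attribute and its salt, and nothing else."""
    return {"name": name, "value": attributes[name], "salt": salts[name]}


def verify(disclosure: dict, commitments: dict) -> bool:
    """Check a revealed attribute against the published commitment."""
    expected = commitments.get(disclosure["name"])
    if expected is None:
        return False
    actual = commit(disclosure["name"], disclosure["value"], disclosure["salt"])
    return secrets.compare_digest(expected, actual)


# Made-up identity data held by the user.
identity = {"name": "Alice Example", "birth_date": "2000-01-31", "country": "DE"}
salts, commitments = issue(identity)

proof = disclose(identity, salts, "country")  # share the country only
print("country disclosure verifies:", verify(proof, commitments))
```

The verifier learns the country and can confirm it matches what was committed to, while the name and birth date stay hidden behind their fingerprints.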

Decentralized Finance (DeFi) and Personal Wealth Management

The rise of decentralized finance (DeFi) is another area where blockchain is set to transform personal security. By removing intermediaries, DeFi platforms offer more control and security over personal financial transactions.

Blockchain’s transparency and security features make it an ideal backbone for these platforms, promising a more secure and equitable financial ecosystem.

Challenges and Opportunities in Personal Security

The journey towards a blockchain-powered future is not without challenges.

Issues like public acceptance, technological literacy, and regulatory frameworks need to be addressed.

However, these challenges also present opportunities for innovation, collaboration, and education in the realm of personal security.

Conclusion

As blockchain technology matures, its potential to redefine personal security is immense.

From securing digital identities to revolutionizing personal finance, blockchain stands as a pillar of trust and security in the digital world.

Embracing this technology could lead us to a future where our digital lives are more secure, private, and under our control.

How to Prepare for the Blockchain Revolution

Getting Ready for Change

The blockchain revolution is not just coming; it’s already here. But how do we prepare for this seismic shift in digital security?

Whether you’re an individual, a business leader, or a technology enthusiast, gearing up for the blockchain era requires a proactive approach. Here’s how to get ready.

Educating Yourself and Your Team

Knowledge is power, especially when it comes to emerging technologies. Start by educating yourself about blockchain technology.

Online courses, webinars, and industry conferences are great resources. If you’re leading a team or an organization, consider facilitating blockchain training sessions to stay ahead of the curve.

Evaluating Blockchain Applications in Your Life or Business

Look at how blockchain could apply to your personal life or your business operations.

This might involve exploring blockchain-based solutions for data storage, identity verification, or even investment opportunities in the blockchain space.

Participating in Blockchain Communities and Networks

Engagement with blockchain communities can provide invaluable insights and keep you updated on the latest trends and developments.

Online forums, social media groups, and local meetups can serve as platforms for discussion and networking.

Experimenting with Blockchain Technologies

Hands-on experience is one of the best ways to understand blockchain.

Consider experimenting with blockchain applications, such as using a blockchain-based service or investing in cryptocurrencies.

This practical exposure can demystify the technology and reveal its potential applications in your life.

Understanding the Regulatory Landscape

Stay informed about the regulatory environment surrounding blockchain technology, especially if you’re considering it for business applications.

Keeping abreast of legal and compliance issues is crucial to navigating the blockchain space successfully.

Conclusion

Preparing for the blockchain revolution involves education, experimentation, and engagement with the blockchain community.

By taking these steps, individuals and businesses can position themselves to harness the benefits of blockchain technology and navigate the challenges it presents.

The future belongs to those who are ready to embrace change and innovate, and blockchain is a field ripe with opportunities for both.

Debunking Myths about Blockchain Security

Separating Fact from Fiction

Blockchain technology, while revolutionary, is often shrouded in myths and misconceptions, especially regarding its security aspects.

It’s crucial to separate fact from fiction to understand the true capabilities and limitations of blockchain security. Let’s debunk some common myths.

Myth 1: Blockchain is Inherently Invulnerable

Fact: While blockchain’s design makes it highly secure, it’s not infallible. Issues like code vulnerabilities in smart contracts and potential 51% attacks on certain types of blockchains can pose risks.

However, compared with a traditional centralized database, blockchain’s decentralized and cryptographic design makes recorded data much harder to alter undetected and removes the single point of failure that many common attacks target.
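
To make the 51% attack risk more concrete, here is a minimal sketch of the gambler's-ruin estimate used in the Bitcoin whitepaper: the chance that an attacker who is some number of blocks behind ever catches up with the honest chain. The function name and parameters are illustrative assumptions, and the model ignores network delay and other real-world factors.

```python
def catch_up_probability(attacker_share: float, blocks_behind: int) -> float:
    """Chance that an attacker ever catches up from `blocks_behind` blocks.

    With attacker share q and honest share p = 1 - q, the gambler's-ruin
    result is 1 when q >= p and (q / p) ** blocks_behind otherwise.
    """
    if not 0.0 <= attacker_share <= 1.0:
        raise ValueError("attacker_share must be between 0 and 1")
    if blocks_behind < 0:
        raise ValueError("blocks_behind must be non-negative")
    q = attacker_share
    p = 1.0 - q
    if q >= p:
        # A majority (or exactly half) of the hash power always catches up.
        return 1.0
    return (q / p) ** blocks_behind


if __name__ == "__main__":
    for share in (0.10, 0.30, 0.45, 0.51):
        print(f"share={share:.2f} -> {catch_up_probability(share, 6):.6f}")
```

Under this simplified model, the probability shrinks exponentially with each additional confirmation as long as the attacker holds a minority share, and jumps to certainty once the attacker controls half or more of the network.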

Myth 2: All Blockchains are Public and Transparent

Fact: Not all blockchains are created equal.

While public blockchains offer transparency and decentralization, private blockchains control access more strictly, offering privacy and efficiency.

The choice between public and private blockchains depends on the specific needs and context of use.

Myth 3: Blockchain and Cryptocurrency are Interchangeable

Fact: Cryptocurrencies like Bitcoin are just one application of blockchain technology.

Blockchain has a myriad of other applications beyond digital currencies, from supply chain management to digital identity verification.

Myth 4: Blockchain Transactions are Always Anonymous

Fact: Anonymity in blockchain transactions varies. Cryptocurrencies like Bitcoin are pseudonymous rather than anonymous: addresses are not tied to real names, but every transaction is recorded on a public ledger.

Moreover, advanced techniques can sometimes trace blockchain transactions, challenging the notion of absolute anonymity.

Myth 5: Blockchain is a Data Privacy Solution

Fact: Blockchain can enhance data security, but it doesn’t automatically guarantee data privacy.

Data written to a public blockchain is visible to every participant and is very hard to remove, so the privacy level depends on what is recorded on-chain and how it is encrypted.

Conclusion

Understanding the realities of blockchain security is vital for its effective implementation and trust.

By debunking these myths, we can appreciate the true potential and limitations of blockchain, paving the way for informed and effective use of this transformative technology.

The Ultimate Guide to Blockchain Security Tools

Your Toolkit for the Future

As blockchain technology becomes increasingly prevalent, the importance of understanding and utilizing blockchain security tools grows.

Whether you’re a blockchain enthusiast, a business professional, or someone curious about the technology, having a grasp of these tools is essential.

Here’s a guide to some key blockchain security tools.

Cryptographic Wallets: Secure Storage for Digital Assets

Cryptographic wallets are essential for securely storing and managing digital assets like cryptocurrencies.

They store private keys, the secret half of a public-key cryptography key pair, which let users prove ownership of their assets and authorize transactions securely.

Choosing a reputable wallet, whether it’s a software, hardware, or paper wallet, is crucial for asset security.
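
The sketch below illustrates how a private key signs a transaction and a public key verifies it. It is an assumption-laden teaching example: real wallets typically use elliptic-curve signatures, while this sketch swaps in a Lamport one-time signature because it needs only a hash function from the Python standard library. All function names are hypothetical, and a Lamport key must never sign more than one message.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def generate_keypair():
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(zero), _h(one)) for zero, one in private]
    return private, public


def _message_bits(message: bytes):
    """The 256 bits of the message's SHA-256 digest, most significant first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(private, message: bytes):
    """Reveal one secret per digest bit; this is the signature."""
    return [private[i][bit] for i, bit in enumerate(_message_bits(message))]


def verify(public, message: bytes, signature) -> bool:
    """Check every revealed secret against the matching public hash."""
    if len(signature) != 256:
        return False
    bits = _message_bits(message)
    return all(_h(sig) == public[i][bit] for i, (sig, bit) in enumerate(zip(signature, bits)))


if __name__ == "__main__":
    private_key, public_key = generate_keypair()
    tx = b"send 1 coin to alice"
    signature = sign(private_key, tx)
    print(verify(public_key, tx, signature))
    print(verify(public_key, b"send 100 coins to mallory", signature))
```

Anyone holding only the public key can check the signature, but producing a valid signature for a different transaction would require the private key, which is why protecting that key is the wallet's main job.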

Smart Contract Auditing Tools

As smart contracts become more complex, the need for thorough auditing increases.

Mythril, for example, is a security analysis tool that scans smart contracts for known vulnerability patterns, while OpenZeppelin offers audited contract libraries and auditing services; both help confirm that contracts behave as intended.

Blockchain Explorers: Tracking Transactions

Blockchain explorers are tools that allow users to view and track transactions on a blockchain.

They offer transparency and the ability to verify transactions, which is crucial for trust and security in blockchain ecosystems.
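
One way to make "verifying a transaction" concrete is a Merkle inclusion proof, the mechanism many blockchains use to show that a transaction belongs to a block without downloading the whole block. The following is a simplified sketch, not how any particular explorer is implemented: it uses a single SHA-256 per node, duplicates the last hash on odd-sized levels, and all names are hypothetical.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(transactions, index):
    """Return (root, proof) for the transaction at `index`.

    The proof is a list of (sibling_hash, sibling_is_left) pairs,
    ordered from the leaf level up to the root.
    """
    if not transactions:
        raise ValueError("at least one transaction is required")
    if not 0 <= index < len(transactions):
        raise IndexError("index out of range")
    level = [_h(tx) for tx in transactions]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # pad odd levels by repeating the last hash
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [_h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_inclusion(transaction, proof, root) -> bool:
    """Recompute the path from the transaction up to the root."""
    current = _h(transaction)
    for sibling, sibling_is_left in proof:
        current = _h(sibling + current) if sibling_is_left else _h(current + sibling)
    return current == root


if __name__ == "__main__":
    txs = [b"tx-a", b"tx-b", b"tx-c"]
    root, proof = merkle_root_and_proof(txs, 2)
    print(verify_inclusion(b"tx-c", proof, root))
    print(verify_inclusion(b"tx-forged", proof, root))
```

The proof contains only one hash per tree level, so checking a single transaction stays cheap even when a block holds thousands of them.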

Decentralized Identity Applications

Decentralized identity applications use blockchain to provide users with more control over their personal information.

These tools are foundational for creating secure digital identities and can be used for authentication purposes without the need for centralized databases.

API Security Tools

APIs are vital for blockchain applications, and securing them is paramount.

Standards like OAuth 2.0 and OpenID Connect, together with API gateways, help secure and manage access to blockchain-based APIs, protecting against unauthorized access and data breaches.

Conclusion

The landscape of blockchain security tools is vast and evolving. Familiarizing oneself with these tools is a step towards harnessing the full potential of blockchain technology.

As blockchain continues to grow and integrate into various sectors, these tools will become instrumental in securing and optimizing blockchain applications.

Final Conclusion

As we journey through the intricate world of blockchain technology and its impact on digital security, it becomes evident that we are standing on the brink of a major technological revolution.

From debunking myths about blockchain security to exploring an array of advanced security tools, our expedition reveals a future where blockchain is poised to redefine the norms of digital safety and privacy.

This transformative technology, with its decentralized nature, cryptographic security, and innovative applications, offers more than just a new way to secure digital transactions; it promises a future where our digital identities and assets are protected with unprecedented robustness.

Embracing blockchain, understanding its potential, and preparing for its widespread adoption are crucial steps towards a safer, more secure digital world.

As we continue to explore and innovate in this exciting field, the possibilities for enhanced digital security seem limitless.

FAQs

Is blockchain technology applicable in everyday life, or is it just for tech experts?

Blockchain technology is increasingly becoming applicable in everyday life, not just for tech experts. Its applications range from secure online transactions and digital identity management to supply chain tracking and voting systems, making it relevant and beneficial for the general public.

Can blockchain be used to secure all kinds of digital data, or is it limited to financial transactions?

Blockchain can secure various kinds of digital data, not just financial transactions. Its applications include securing personal data, healthcare records, digital identities, and more, demonstrating its versatility beyond just financial uses.

How does blockchain technology offer more security compared to traditional security methods?

Blockchain technology offers more security through its decentralized structure, which eliminates single points of failure, and its use of advanced cryptography, which ensures data integrity and prevents unauthorized alterations.

Are there any significant environmental concerns associated with blockchain technology?

Yes, certain blockchain implementations, particularly those using Proof of Work (PoW) consensus mechanisms, can be energy-intensive and raise environmental concerns. However, alternative consensus mechanisms like Proof of Stake (PoS) are far more energy-efficient and are being adopted to address these concerns; Ethereum, for example, switched from PoW to PoS in 2022.
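
To see where the energy goes, consider this toy Proof of Work loop. It is a sketch under simplifying assumptions (a single SHA-256 and a hypothetical `difficulty_bits` parameter), not the mining algorithm of any specific network: the miner keeps trying nonces until the hash falls below a target, and each extra bit of difficulty roughly doubles the expected number of attempts.

```python
import hashlib


def meets_difficulty(block_data: bytes, nonce: int, difficulty_bits: int) -> bool:
    """True if the hash of data + nonce has `difficulty_bits` leading zero bits."""
    digest = hashlib.sha256(block_data + nonce.to_bytes(8, "big")).digest()
    target = 1 << (256 - difficulty_bits)
    return int.from_bytes(digest, "big") < target


def mine(block_data: bytes, difficulty_bits: int) -> int:
    """Brute-force search for the smallest nonce that meets the difficulty."""
    nonce = 0
    while not meets_difficulty(block_data, nonce, difficulty_bits):
        nonce += 1
    return nonce


if __name__ == "__main__":
    for bits in (8, 12, 16):
        nonce = mine(b"demo block", bits)
        print(f"{bits} bits: found nonce after {nonce + 1} attempts")
```

Finding a valid nonce takes many hash computations, while checking one takes a single call to `meets_difficulty`; that asymmetry is what secures PoW chains and also what makes them power-hungry.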

How can individuals ensure they are using blockchain technology safely and effectively?

Individuals can ensure safe and effective use of blockchain technology by educating themselves about its basics, using reputable blockchain services and wallets, staying informed about security best practices, and being cautious of scams and fraudulent schemes in the blockchain space.

Smart Cities 101: Pioneering the Future of Urban Living

In an era where urbanization is rapidly reshaping our world, the concept of ‘smart cities’ has emerged as a beacon of hope and innovation.

But what exactly constitutes a smart city?

Simply put, a smart city uses digital technology and data-driven strategies to enhance the quality of life for its inhabitants, streamline urban services, and create sustainable, efficient urban environments.

This comprehensive guide delves into the essence of smart cities, exploring their components, benefits, challenges, and real-world applications.

As pioneers in urban living, smart cities represent more than just a technological leap; they symbolize a fundamental shift in how we conceptualize and interact with our urban environments.

In the following sections, we will uncover the layers that make a city ‘smart’, examine case studies of successful smart cities around the globe, and discuss the future trajectory of this revolutionary concept.

Whether you’re a city planner, technology enthusiast, or just a curious reader, this article will provide you with a deep understanding of smart cities and their role in shaping our future.

Understanding Smart Cities: An Overview

What is a Smart City?

At its core, a smart city employs a range of digital technologies to collect data, which is then used to manage assets, resources, and services efficiently.

This data comes from citizens, devices, buildings, and assets, and is processed and analyzed to monitor and manage traffic and transportation systems, power plants, water supply networks, waste, crime detection, information systems, schools, libraries, hospitals, and other community services.

The Key Components of a Smart City

A smart city comprises several components, including:

- Advanced Connectivity: Robust and widespread internet access forms the backbone of a smart city.

- Data Analytics: Using big data and AI to make informed decisions and improve city services.

- Sustainability: Emphasis on eco-friendly practices and reducing the city’s carbon footprint.

- Citizen Engagement: Using technology to foster a closer relationship between the government and its citizens.

The Benefits of Smart Cities

Enhanced Quality of Life

One of the primary advantages of smart cities is the improved quality of life they offer. By optimizing city functions and promoting sustainable development, residents enjoy cleaner air, reduced traffic congestion, and more efficient public services.

Economic Growth and Innovation

Smart cities are hotbeds for innovation and economic growth. They attract businesses and investors, fostering a dynamic economic environment.

Challenges in Developing Smart Cities

Data Privacy and Security Concerns

While smart cities offer numerous benefits, they also raise concerns about data privacy and security. Ensuring the safety and privacy of citizens’ data is paramount.

Infrastructure and Investment Hurdles

Developing the necessary infrastructure for smart cities requires significant investment and planning. Overcoming these hurdles is crucial for the successful implementation of smart cities.

Case Studies: Smart Cities in Action

Let’s look at some real-world examples of smart cities:

- Singapore: Often hailed as one of the smartest cities globally, Singapore uses technology to improve everything from sanitation to traffic management.

- Barcelona: Known for its innovative use of technology in urban planning and services.

The Future of Smart Cities

As technology continues to evolve, so will the concept of smart cities. The integration of AI, IoT, and other emerging technologies will further enhance the efficiency and effectiveness of urban areas.

The Evolution of Smart Cities

The concept of a ‘smart city’ is not a recent phenomenon. It has evolved over decades, adapting to the changing needs of urban populations and technological advancements.

The roots of smart cities can be traced back to the urban development movements of the late 20th century, where the focus was on creating more efficient and livable urban spaces.

However, the advent of the internet and digital technology in the late 1990s and early 2000s marked a significant shift in this evolution.

The early 2000s saw the introduction of concepts like the ‘digital city’ or ‘cybercity,’ where the internet began to play a crucial role in urban planning.

Cities started to adopt digital technologies for better governance, planning, and public services.

This era witnessed the initial steps towards integrating information and communication technology (ICT) into urban infrastructure, leading to more efficient city management and citizen services.

However, it was not until the late 2000s and early 2010s that the term ‘smart city’ began to gain widespread recognition.

This period marked a shift from mere digitalization to a more holistic approach encompassing sustainability, citizen well-being, and intelligent management of city resources.

The integration of technologies like the Internet of Things (IoT), cloud computing, and big data analytics transformed the way cities operated and interacted with their citizens.

Today, smart cities are at the forefront of urban planning, integrating advanced technologies to create more sustainable, efficient, and livable urban environments.

They represent the culmination of years of technological advancement and urban development philosophy, standing as testaments to human ingenuity in the face of growing urban challenges.

Key Components of a Smart City

Smart cities are characterized by several key components that work together to create a more efficient and sustainable urban environment.

Advanced Connectivity

The foundation of a smart city is its connectivity. High-speed internet and the Internet of Things (IoT) are crucial for enabling real-time data collection and communication between devices and city infrastructure.

This connectivity allows for the seamless operation of various city services, including transportation systems, energy grids, and public safety networks.

The integration of 5G technology is set to further revolutionize this aspect, offering faster speeds and more reliable connections.

Data Analytics and AI

Data is the lifeblood of a smart city. Through the collection and analysis of data from sensors, cameras, and other sources, city administrators can gain valuable insights into urban life.

Artificial Intelligence (AI) plays a pivotal role in processing this data, helping to optimize traffic flow, reduce energy consumption, and improve public services.

Predictive analytics can also be used to anticipate and mitigate urban challenges, such as traffic congestion and crime.

Sustainability Practices

Sustainability is a core principle of smart cities.

The use of green technology, renewable energy sources, and eco-friendly materials is not just about reducing the environmental impact but also about creating a healthier living space for residents.

Smart cities aim to reduce their carbon footprint through efficient energy use, sustainable transportation systems, and waste reduction initiatives.

Citizen Engagement and Governance

A smart city is not just about technology; it’s also about the people who live in it. Engaging citizens in the governance process is crucial for the success of a smart city.

Digital platforms that allow residents to report issues, provide feedback, and participate in decision-making processes help create a sense of community and ensure that the city’s development meets the needs of its inhabitants.

Benefits of Smart Cities

Smart cities offer a multitude of benefits that can significantly improve the quality of urban living.

Improved Quality of Life

By optimizing city functions, smart cities can provide a higher standard of living for their residents.

This includes reduced traffic congestion, better air quality, more efficient public transportation, and quicker response times to emergencies.

The use of smart technologies also enhances public services, making them more accessible and efficient.

Economic Growth and Innovation

Smart cities are engines of economic growth and innovation. By attracting tech companies and startups, these cities become hotspots for technological advancements.

The emphasis on digital infrastructure and sustainable practices makes smart cities attractive to businesses and investors, leading to job creation and economic diversification.

Enhanced Public Safety and Security

With advanced surveillance systems and data analytics, smart cities can significantly improve public safety and security.

Real-time monitoring and predictive policing can help in preventing crimes and quickly responding to emergencies, creating a safer environment for residents.

Environmental Benefits and Sustainability

Smart cities play a crucial role in promoting environmental sustainability.

Through efficient energy use, sustainable urban planning, and waste management systems, these cities can significantly reduce their ecological footprint, contributing to the fight against climate change.

Challenges and Considerations

Despite their numerous benefits, smart cities also face several challenges and considerations that need to be addressed.

Data Privacy and Security Concerns

The vast amount of data collected and processed in smart cities raises concerns about privacy and security.

Ensuring the protection of citizen data against breaches and misuse is a significant challenge that requires robust cybersecurity measures and clear data governance policies.

High Costs and Infrastructure Challenges

Building and maintaining the infrastructure of a smart city requires substantial investment. Upgrading existing urban frameworks to support new technologies can be costly and complex.

Additionally, ensuring the continuous operation and maintenance of these systems presents ongoing financial and logistical challenges.

Digital Divide and Ensuring Inclusivity

There is a risk that smart cities could exacerbate the digital divide.

Ensuring that all citizens, regardless of their socio-economic background, have equal access to the benefits of smart city technologies is essential for preventing inequality and social exclusion.

Governance and Regulatory Challenges

Developing the regulatory framework to manage and govern smart cities is another significant challenge. Policymakers need to establish clear guidelines and standards for data usage, technology deployment, and public-private partnerships.

Global Case Studies: Smart Cities in Action

Examining real-world examples provides valuable insights into how smart cities operate and the impact they have on urban living.

Singapore: A Model Smart City

Singapore is often cited as one of the most successful examples of a smart city.

The city-state has implemented an extensive range of smart technologies to enhance living conditions and operational efficiency.

Key initiatives include intelligent transportation systems, which have significantly reduced traffic congestion and pollution, and smart water management systems that ensure sustainable water usage.

Singapore’s Smart Nation initiative showcases the power of technology in transforming urban environments.

Barcelona: Innovating Through Technology

Barcelona has gained recognition for its innovative use of technology in city management.

The city’s smart initiatives include the installation of sensors to monitor parking and traffic, smart lighting to conserve energy, and digitalized waste management systems.

These efforts have not only improved urban services but have also fostered economic growth, positioning Barcelona as a hub for technological innovation.

Other Notable Examples

Other cities around the globe are also embracing smart technologies.

For instance, Stockholm’s focus on eco-friendly solutions has made it a leader in sustainable urban living. Amsterdam’s smart city projects emphasize citizen participation and open data.

Dubai’s Smart City initiative aims to make the city one of the most technologically advanced and happiest in the world.

The Future of Smart Cities

As we look towards the future, the evolution of smart cities is intertwined with technological advancements and changing urban needs.

Emerging Technologies and Their Impact

The integration of new technologies such as 5G, advanced AI, and the Internet of Things (IoT) will further enhance the capabilities of smart cities.

These technologies will enable faster, more reliable communication, improved data analysis, and greater automation of city services.

The potential for innovation is immense, from self-driving vehicles to AI-driven urban planning.

Smart Cities in Developing vs. Developed Countries

The concept of smart cities is not limited to developed nations.

Developing countries also have the opportunity to leverage smart technologies, potentially leapfrogging traditional development stages.

In these regions, smart city initiatives can address critical challenges like rapid urbanization, resource management, and service delivery.

Predictions for the Next Decade

In the next decade, we can expect smart cities to become more integrated, with interconnected systems working seamlessly to improve urban life.

There will likely be a greater emphasis on sustainability and resilience, especially in the face of climate change and growing environmental concerns.

The role of citizens in shaping smart city initiatives will also become more prominent, with increased focus on participatory governance and community engagement.

Conclusion

Smart cities represent the frontier of urban development, offering a blend of technology, sustainability, and enhanced quality of life.

While they present significant opportunities for innovation and improvement, challenges such as data privacy, inclusivity, and governance must be addressed.

As we continue to evolve and expand the concept of smart cities, they will undoubtedly play a pivotal role in shaping the future of urban living.

FAQs

- How do smart cities improve daily life? Smart cities enhance daily life through efficient public services, reduced traffic congestion, improved environmental quality, and increased safety.

- What role does technology play in smart cities? Technology is central to smart cities, enabling data collection, analysis, and the automation of services for better city management.

- Can smart cities help in environmental conservation? Yes, by implementing sustainable practices and technologies, smart cities can play a significant role in environmental conservation and reducing carbon emissions.

- Are smart cities expensive to implement? While initial investments can be high, the long-term benefits and efficiencies often justify the costs.

- How can citizens contribute to the development of smart cities? Citizens can contribute by participating in governance, providing feedback on services, and adopting smart technologies in their daily lives.

Web3 Revolution: Decentralize Everything and Rewrite the Rules

In an era where digital innovation is not just a trend but a necessity, the emergence of Web3 is revolutionizing how we perceive and interact with the internet.

The journey from Web1, a read-only web, to Web2, characterized by interactivity and social media, has been transformative.

Now, Web3 is poised to take a giant leap forward, advocating for a decentralized, blockchain-based internet, promising to enhance user autonomy and disrupt traditional power structures.

But why is decentralization so crucial, and how will it impact society at large?

Web3 is not just a technological upgrade; it’s a paradigm shift. It’s about redistributing power from centralized authorities to the edges of the network, to the users themselves.

This shift has profound implications for everything from finance to personal identity, challenging the status quo and offering a more inclusive and equitable digital future.

As we delve deeper into the world of Web3, we uncover key concepts like Decentralized Finance (DeFi), Non-fungible Tokens (NFTs), and decentralized social media platforms that are not just buzzwords but harbingers of a new digital era.

These innovations are rewriting the rules of engagement, ownership, and participation in the digital realm.

However, the path to a fully decentralized world is not without its challenges. Issues like scalability, security, and regulatory compliance pose significant hurdles.

Moreover, the environmental impact of blockchain technologies and the digital divide are concerns that need urgent addressing.

As we embark on this journey through the article, we will explore the multifaceted aspects of Web3 and decentralization, analyzing their potential, challenges, and the future they promise.

Join us in uncovering the depths of this digital revolution and envision a future where the internet is not just a tool, but an extension of our collective will, decentralized and democratic.

Understanding Web3 and Decentralization

The journey from Web1 to Web3 marks a significant evolution in the internet’s history.

Initially, Web1 offered a static experience, where users were mere consumers of content. The transition to Web2 brought interactivity, with platforms like social media allowing users to be both creators and consumers.

However, this era also centralized power in the hands of a few large tech companies, raising concerns about privacy, data ownership, and control.

Enter Web3, the next internet frontier, built on the principles of decentralization and blockchain technology.

It promises to return control and ownership back to the users. In this new paradigm, data is distributed across a blockchain network, ensuring transparency, security, and resistance to censorship.

Key technologies driving Web3 include blockchain, which provides a decentralized ledger for transparent and tamper-resistant record-keeping; smart contracts, which are self-executing contracts with the terms directly written into code; cryptocurrencies, which are digital or virtual currencies secured by cryptography; and decentralized applications (dApps), which run on a blockchain network instead of being hosted on centralized servers.

Decentralization is not just a technological advancement; it’s a societal shift.

By democratizing access and control over the internet, Web3 has the potential to reduce the power imbalances seen in today’s digital landscape.

It opens up new opportunities for individual empowerment, privacy protection, and equitable participation in the digital economy. However, realizing this potential requires overcoming technical, regulatory, and societal challenges inherent in such a transformative shift.

Evolution from Web1 to Web3

The internet’s evolution is a story of constant change and innovation.

In its earliest form, Web1, the internet was a collection of static webpages, akin to a digital encyclopedia. This era was defined by limited user interaction and content creation, dominated by read-only websites.

Then came Web2, an interactive and social web, where users became creators. Platforms like Facebook, YouTube, and Twitter facilitated unprecedented levels of participation, collaboration, and information sharing.

However, this era also saw the rise of centralization, with a few large companies controlling vast swathes of the online landscape.

Enter Web3, a paradigm shift towards a decentralized internet. This new era is built on the backbone of blockchain technology, offering a decentralized, transparent, and user-centric experience.

Web3 represents a significant departure from the monopolized structure of Web2, emphasizing user sovereignty and data privacy. It’s an internet where users are not just consumers but also owners and stakeholders in the platforms they use.

Significance of Decentralization in Society

Decentralization is more than a technological concept; it’s a movement towards redistributing power and control.

In a decentralized system, there is no central point of control or failure. This architecture has profound implications for society, especially in terms of data ownership, privacy, and security.

Decentralization challenges the status quo of how data is managed, stored, and utilized, offering a more democratic model where users have greater control over their information.

This shift has the potential to remodel various societal structures, from finance to governance. Decentralization promises to reduce the influence of intermediaries, lower barriers to entry, and foster a more equitable distribution of resources and opportunities.

It’s about creating a more balanced digital ecosystem where the benefits of technology are accessible to all, not just a select few.

Key Concepts and Technologies in Web3

Web3 is underpinned by several key concepts and technologies:

- Blockchain: At the heart of Web3 is blockchain technology, a distributed ledger that records transactions across many computers, ensuring transparency, security, and immutability.

- Smart Contracts: These are self-executing contracts with the terms of the agreement directly written into code, enabling trustless and automated transactions.

- Cryptocurrencies and Tokens: Digital currencies and assets play a crucial role in Web3, facilitating transactions and incentivizing network participation.

- Decentralized Applications (dApps): These applications run on a decentralized network, rather than a single computer, enhancing security and resistance to censorship.

These technologies together form the backbone of the Web3 ecosystem, providing a foundation for a new internet era where trust, transparency, and user empowerment are paramount.
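To make the "immutability" of a distributed ledger more concrete, here is a minimal sketch of a hash-linked chain of blocks. It is a simplification for illustration only: the function names (`block_hash`, `append_block`, `is_valid`) are hypothetical, and a real blockchain adds consensus, signatures, and networking on top of this idea.

```python
import hashlib
import json


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Append a new block that commits to the current tip of the chain."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append(
        {
            "index": index,
            "data": data,
            "prev_hash": prev_hash,
            "hash": block_hash(index, data, prev_hash),
        }
    )


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else "0" * 64
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["hash"] != recomputed:
            return False
    return True


ledger = []
append_block(ledger, "Alice pays Bob 5")
append_block(ledger, "Bob pays Carol 2")
print(is_valid(ledger))  # True

ledger[0]["data"] = "Alice pays Bob 500"  # tamper with an old record
print(is_valid(ledger))  # False
```

Because each block stores the hash of the one before it, changing an old record breaks validation unless every later block is rewritten as well, which is what makes tampering easy to detect.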

The Most Radical Decentralized Projects Changing the World

Decentralized projects in the Web3 space are not just technological innovations; they are redefining entire industries and societal structures.

Decentralized Finance (DeFi)

DeFi is reimagining the financial system by leveraging blockchain technology to create a more open, accessible, and transparent financial ecosystem.

Unlike traditional finance, DeFi operates without central financial intermediaries such as banks, exchanges, or insurance companies. It utilizes smart contracts on blockchains, predominantly Ethereum, to execute financial transactions, offering services like lending, borrowing, and trading in a trustless environment.

This innovation enables anyone with an internet connection to access financial services, bypassing geographical and socio-economic barriers.

DeFi’s potential to democratize finance is immense. It offers an alternative to those underserved by the traditional banking system, opening up possibilities for financial inclusion on a global scale.

However, the nascent nature of DeFi also brings challenges like high volatility, regulatory uncertainty, and security risks.
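As a rough illustration of how a lending service can run on rules written into code rather than on an intermediary's approval, the sketch below models a toy over-collateralized loan. Everything here is an assumption made for the example: the `LendingPool` class, its method names, and the 150% collateral requirement are hypothetical and do not describe any specific DeFi protocol.

```python
class LendingPool:
    """Toy lending rule enforced purely by code (not a real protocol)."""

    # Illustrative assumption: debt may never exceed collateral / 1.5.
    MIN_COLLATERAL_PERCENT = 150

    def __init__(self):
        self.collateral = {}  # borrower -> deposited collateral value
        self.debt = {}        # borrower -> outstanding debt

    def deposit_collateral(self, borrower, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.collateral[borrower] = self.collateral.get(borrower, 0) + amount

    def max_borrow(self, borrower):
        """Largest total debt the collateral supports under the ratio rule."""
        deposited = self.collateral.get(borrower, 0)
        return deposited * 100 // self.MIN_COLLATERAL_PERCENT

    def borrow(self, borrower, amount):
        """Approve the loan only if the borrower stays over-collateralized."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        new_debt = self.debt.get(borrower, 0) + amount
        if new_debt > self.max_borrow(borrower):
            return False
        self.debt[borrower] = new_debt
        return True


pool = LendingPool()
pool.deposit_collateral("alice", 300)
print(pool.borrow("alice", 200))  # True: 300 in collateral supports 200 of debt
print(pool.borrow("alice", 1))    # False: any further debt breaks the rule
```

The point of the sketch is that the approval decision is a deterministic check anyone can inspect, which is the sense in which such services are called trustless.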

Non-fungible Tokens (NFTs)

NFTs have captured the world’s attention as a revolutionary way to represent ownership and authenticity of unique digital items using blockchain technology.

Unlike cryptocurrencies, NFTs are not interchangeable; each token is unique and holds distinct value. Initially popular in the art world, they allow artists to monetize digital works in ways previously impossible, offering proof of ownership and provenance.

The implications of NFTs extend beyond art into areas like real estate, gaming, and digital identity.

They represent a significant shift in how we understand ownership and value in the digital realm, challenging traditional models of intellectual property and asset management.
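The ideas of uniqueness, ownership, and provenance can be sketched as a small registry. This is an in-memory toy, not an on-chain contract: the `TokenRegistry` class, its methods, and the placeholder metadata URI are all hypothetical names chosen for the example.

```python
class TokenRegistry:
    """Toy registry of unique tokens; names here are illustrative only."""

    def __init__(self):
        self._owners = {}    # token_id -> current owner
        self._metadata = {}  # token_id -> URI describing the item
        self._history = {}   # token_id -> list of every owner so far

    def mint(self, token_id, owner, metadata_uri):
        """Create a token; each token_id may exist only once."""
        if token_id in self._owners:
            raise ValueError(f"token {token_id} already exists")
        self._owners[token_id] = owner
        self._metadata[token_id] = metadata_uri
        self._history[token_id] = [owner]

    def transfer(self, token_id, sender, recipient):
        """Move a token, but only when the sender is its current owner."""
        if token_id not in self._owners:
            raise KeyError(f"token {token_id} does not exist")
        if self._owners[token_id] != sender:
            raise PermissionError("only the current owner can transfer")
        self._owners[token_id] = recipient
        self._history[token_id].append(recipient)

    def owner_of(self, token_id):
        return self._owners[token_id]

    def provenance(self, token_id):
        """Return the ordered list of owners, oldest first."""
        return list(self._history[token_id])


registry = TokenRegistry()
registry.mint(1, "artist", "ipfs://example-artwork")  # placeholder URI
registry.transfer(1, "artist", "collector")
print(registry.owner_of(1))    # collector
print(registry.provenance(1))  # ['artist', 'collector']
```

Each token ID maps to exactly one owner at a time, and the recorded history of owners is what provides provenance.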

Decentralized Identity Solutions

Decentralized identity solutions seek to return control of personal data to individuals. Leveraging blockchain technology, these solutions enable users to create and manage their digital identities without relying on centralized authorities.

This approach enhances privacy, reduces the risk of data breaches, and gives users control over how their personal information is shared and used.

In a world increasingly concerned with data privacy and security, decentralized identity solutions offer an alternative to the centralized models of digital identity management, often prone to misuse and exploitation.

Decentralized Social Media and Content Platforms

Decentralized social media and content platforms aim to address the challenges of censorship, privacy, and data control prevalent in traditional, centralized platforms.

By leveraging blockchain technology, these platforms allow users to control their data, monetize their content, and engage in a more transparent and equitable online environment.

These platforms challenge the existing social media paradigm, offering a vision of the internet where freedom of expression and user empowerment are central.

Decentralized Computing and Storage Solutions

Decentralized computing and storage solutions, such as IPFS and Filecoin, offer an alternative to centralized cloud storage and computing services.

These decentralized networks allow users to store and access data across a distributed network of nodes, enhancing security, privacy, and resistance to censorship.

By decentralizing data storage and computation, these solutions not only offer a more robust and secure infrastructure but also challenge the monopolistic hold of big tech companies over data storage and computing resources.
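Networks such as IPFS identify data by a hash of its content rather than by its location. The sketch below shows that idea in a deliberately simplified form: a plain SHA-256 hex digest stands in for a real content identifier, a single dictionary stands in for a network of nodes, and the `ContentStore` class is a hypothetical name for illustration.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the address is the hash of the data."""

    def __init__(self):
        self._blobs = {}  # address (hex digest) -> stored bytes

    def put(self, data: bytes) -> str:
        """Store data and return the address derived from its content."""
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data
        return address

    def get(self, address: str) -> bytes:
        """Fetch data and verify it still matches the requested address."""
        data = self._blobs[address]
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
address = store.put(b"hello, decentralized web")
print(store.get(address))  # b'hello, decentralized web'

# Identical content always yields the same address, whoever stores it.
print(store.put(b"hello, decentralized web") == address)  # True
```

Because the address is derived from the content, a reader can check that what any node returns is exactly what was requested, without having to trust that node.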

The Challenges and Risks of Decentralization

While the promise of Web3 and decentralization is immense, it’s not without its challenges and risks.

Scalability and Performance Issues

One of the most significant challenges facing decentralized technologies is scalability. Blockchain networks, in particular, struggle to handle large volumes of transactions quickly and efficiently.

This limitation impacts the user experience and hinders the widespread adoption of decentralized applications.

Solutions like layer-2 scaling (e.g., the Lightning Network for Bitcoin, and Plasma and rollups for Ethereum) and alternative consensus mechanisms (e.g., Proof of Stake) are being developed to address this limitation.

However, achieving scalability without compromising security and decentralization remains a complex challenge.
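One building block behind many layer-2 designs is batching: many transactions are processed off the main chain, and only a compact commitment to the whole batch is recorded on it. The sketch below computes a Merkle root as such a commitment. It is a simplified illustration under stated assumptions: the `merkle_root` helper and the string-encoded transactions are hypothetical, and production systems differ in how they encode leaves, pad odd levels, and prove validity.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves):
    """Reduce a list of byte strings to a single 32-byte commitment."""
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = [sha256(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last hash on odd levels
        level = [
            sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)
        ]
    return level[0]


batch = [b"alice->bob:5", b"bob->carol:2", b"carol->dave:1"]
root = merkle_root(batch)
print(root.hex())  # one short value stands in for the whole batch

# Changing any transaction in the batch changes the commitment.
altered = [b"alice->bob:500", b"bob->carol:2", b"carol->dave:1"]
print(merkle_root(altered) != root)  # True
```

Recording one fixed-size root instead of every transaction is what reduces the load on the main chain, while still letting anyone detect a change to the batch.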

Security Concerns and Potential Attacks

The decentralized nature of blockchain and related technologies brings unique security challenges.

Smart contracts, despite their potential, are susceptible to bugs and vulnerabilities, which can lead to significant losses. Decentralized autonomous organizations (DAOs) are not immune to attacks either, as the 2016 exploit of The DAO on Ethereum showed.

Ensuring the security of decentralized networks and applications requires constant vigilance, innovative security solutions, and a community-driven approach to identify and address vulnerabilities.

Regulatory Hurdles and Legal Considerations

The decentralized world exists in a regulatory grey area. Many countries are still grappling with how to regulate cryptocurrencies, DeFi, NFTs, and other decentralized technologies.

The lack of clear regulations creates uncertainty for investors, developers, and users.

Regulators face the challenge of protecting consumers and preventing illicit activities while not stifling innovation in this rapidly evolving space.

Finding a balance between regulation and innovation is key to the sustainable growth of decentralized technologies.

Environmental Impact and Sustainability

The environmental impact of certain blockchain technologies, especially those relying on energy-intensive Proof of Work (PoW) consensus mechanisms, is a growing concern.

The high energy consumption associated with mining activities, primarily for cryptocurrencies like Bitcoin, has led to debates about the sustainability of these technologies.

In response, there is a growing shift towards more energy-efficient consensus mechanisms like Proof of Stake (PoS), which Ethereum adopted in 2022.

The industry is also exploring renewable energy sources and more sustainable practices to mitigate the environmental impact.
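The core idea of Proof of Stake, choosing who proposes the next block in proportion to the stake they have locked up instead of the computing power they spend, can be sketched in a few lines. This is only an illustration: the `pick_validator` function, the validator names, and the stake amounts are hypothetical, and real protocols add verifiable randomness, committees, and penalties for misbehavior.

```python
import random


def pick_validator(stakes, rng):
    """Choose one validator with probability proportional to its stake."""
    validators = list(stakes)
    weights = [stakes[name] for name in validators]
    return rng.choices(validators, weights=weights, k=1)[0]


stakes = {"alice": 60, "bob": 30, "carol": 10}  # illustrative stake amounts
rng = random.Random(42)  # fixed seed so the run is repeatable

counts = {name: 0 for name in stakes}
for _ in range(10_000):
    counts[pick_validator(stakes, rng)] += 1

print(counts)  # selections come out roughly in a 60/30/10 proportion
```

Selection here costs a random draw rather than a race to solve a computational puzzle, which is where the energy savings over Proof of Work come from.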

The Digital Divide and Equitable Access

While decentralized technologies offer numerous benefits, there’s a risk that they could exacerbate the digital divide.

Access to these technologies requires not only internet connectivity but also a certain level of technical expertise and financial resources.

Ensuring equitable access to the benefits of decentralization is essential. This includes making these technologies more user-friendly, affordable, and accessible to people across different socio-economic backgrounds.

The Future of Web3 and Decentralization

The future of Web3 and decentralization holds immense possibilities, but it also presents new challenges and questions.

Emerging Trends and Innovations

The field of Web3 and decentralization is rapidly evolving, with new trends and innovations emerging regularly.

Interoperability between different blockchain networks is a key focus, allowing for seamless interaction between various decentralized applications and services.

Advancements in blockchain scalability, such as sharding and new consensus algorithms, are also critical for the future growth of decentralized networks.

These innovations will enable more efficient, scalable, and sustainable decentralized systems.

The Role of AI in Shaping the Decentralized World

AI has the potential to significantly impact the decentralized world. AI-driven analytics can enhance the functionality of decentralized applications, providing insights and optimizing network operations.

AI-powered smart contracts could automate complex processes and decision-making, further enhancing the efficiency and capabilities of decentralized systems.

The integration of AI and blockchain could lead to more intelligent, autonomous, and efficient decentralized networks. However, this convergence also raises new ethical and security considerations that need to be addressed.

Societal Implications and Ethical Considerations

The shift towards a more decentralized internet raises important societal and ethical questions.

Issues like data privacy, equitable access, and the redistribution of economic and social power are at the forefront.

As decentralized technologies become more prevalent, it’s essential to consider their impact on society.

This includes ensuring that the benefits of these technologies are accessible to all and that they are used in a way that promotes fairness, equity, and social good.

Vision for a More Inclusive and Decentralized Future

The ultimate vision of Web3 and decentralization is a more inclusive, equitable, and user-empowered internet.

This vision encompasses greater financial inclusion through DeFi, enhanced personal privacy and control through decentralized identity solutions, and new opportunities for creators and innovators.

Achieving this vision will require not only technological advancements but also a concerted effort to address the societal and ethical implications of these technologies.

It’s about building a digital ecosystem that is open, transparent, and accessible to all.

Conclusion

As we delve into the intricacies of Web3 and its decentralized ethos, it’s clear that we are standing at the cusp of a digital revolution.

This new internet age, characterized by blockchain, smart contracts, and decentralized applications, is not just a technological leap but a societal one.

It offers a glimpse into a future where power is not hoarded by a few but distributed among many, where privacy is a right, not a privilege, and where digital identity is controlled by the individual, not corporations.

The road ahead for Web3 and decentralization is fraught with challenges.

Technical hurdles like scalability and security, legal and regulatory ambiguities, and concerns about environmental sustainability and the digital divide must be navigated carefully.

However, the potential benefits are immense: a more inclusive, equitable, and user-empowered digital world.

The Web3 revolution is not just about technology; it’s about reimagining and reshaping the very fabric of the internet and, by extension, society. It’s about building a digital ecosystem that is open, transparent, and accessible to all, regardless of geography or background.

As we move forward, it will be crucial for developers, users, regulators, and all stakeholders to work collaboratively to overcome the challenges and realize the full potential of this new decentralized world.

In this journey, every individual has a role to play, whether as a developer, a content creator, an investor, or simply as an informed and engaged user.

The future of the internet is not just being written; it’s being decentralized. And in this decentralization lies the promise of a more democratic, equitable, and innovative digital future.

FAQs

What is Web3?